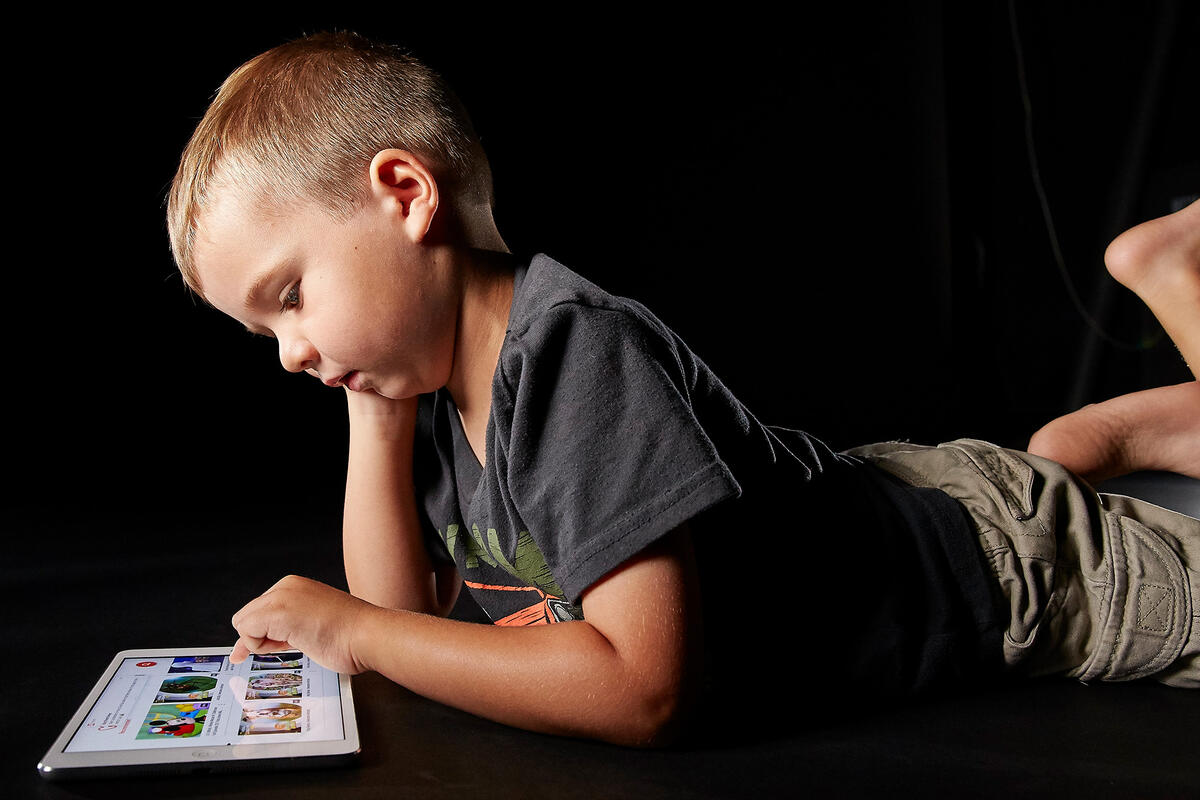

Benjamin Burroughs spends hours studying children's videos on YouTube. A father of three kids who teaches and researches how technology shapes people's lives, Burroughs is fascinated by the growing number of fun, elaborate entertainment videos aimed at babies, toddlers, and children up to 5 years old. The videos may offer parents a break and provide young minds with stimulating content. However, many of the videos Burroughs explores also include either explicit or masked advertising, and the mobile applications used to view them create a perfect environment for targeted advertising and data tracking.

Burroughs, an assistant professor in journalism and media studies, published a research article on this phenomenon this past May. The article, “YouTube Kids: The App Economy and Mobile Parenting,” appears in the journal Social Media + Society.

Burroughs talked with us about how these videos and applications work, their benefits and risks, and how mobile entertainment options influence parenting. After watching his children learn to swipe through mobile applications, he’d like to foster a dialog on these new and growing spaces.

What first interested you in the role media and apps play in people's lives?

I've grown up using media, and it was very apparent to me that social media was going to be kind of a big deal. If you want to understand digital culture, you have to recognize that social media is increasingly not just digital culture but culture in general. It is integral to fields like business and journalism, and to the way we live our lives. It increases the penetration of media into our lives, and things like businesses' use of social media increase their presence in our lives.

What’s so special about mobile apps, specifically?

Everybody has a smartphone, and with smartphone technology, apps become a really important way of connecting to content. Apps are big business. They connect us to specific forms of content, and they become powerful because they contain that specific connection. If you build an app that's developed for a specific audience, it can be a big source of income, engagement, and all that. Apps can also be a really good source of user data.

And they open up targeted markets to advertisers, like kids watching videos?

We've always had advertising that's tried to target children, but this idea of building a data profile and having children be a segment of the market is newer. The ways in which advertisers and companies are connecting with that target demographic is different than before, and the reach that these companies have through these newer technologies is different than it used to be.

It used to be with advertisements, "OK, go get your parents to buy something," but now, they want a 0-to-4-year-old's engagement. Netflix, Amazon, Hulu — they're all developing content specifically for this demographic. A large portion of YouTube's growth is in this market, which is fascinating.

One ethical issue is that advertising can sneak into content...

Some of the most popular videos for young children now are in the unboxing genre, which involves people (who may or may not be anonymous, sometimes with only their hands showing) "unboxing," or opening, toys and other products and then creatively explaining them.

However, many people don't know that a lot of the folks who are doing the unboxing are getting paid to unbox or promote the product they're unboxing, whether that promotion is intentional or subconscious. The product might be provided for free, or the person might be paid. So people are getting paid to do this, and they're making a lot of money.

The entire phenomenon raises questions about parenting in this era...

I come at this topic as a parent who is trying to understand these new developments. How does this change parenting? What does it do to the relationship of a child to media, and what does it do to the relationship of a child to advertising? Are we OK with that level of pervasive advertising being embedded in the lives of children that young? There are concerns about the building of data profiles for children, and a lot of concerns in terms of privacy, safety, and security. But you have to balance that against the positives that go along with digital technology. There's a lot of educational value here. Children can learn in ways they can't in other spaces, learning can be tailored to them, and communities can form.

The YouTube Kids app is a popular example of something tailored to kids...

YouTube obviously saw the development of a kids' app as a big moneymaker, but they wanted to make sure it was family friendly and that parents would be OK with it. YouTube's kids app provides a cordoned-off, well-guarded space that is separate from the main YouTube platform, where they can filter content and, based on algorithms, provide only videos that are appropriate for that age group. It's advantageous for YouTube because they can advertise directly to children, and they know that children are in that space.

The data would be valuable because it tells you right away what kids are interested in, and it also tells you how to develop products for children. YouTube has now gone to great lengths in its terms of service to say that the data won't be stored or used for nefarious purposes, but it's only a matter of time before these types of apps start, in one way or another, to keep track.

And the app has some control over what the child watches...

Before this technology, parents could have control over what children would watch just by saying, "OK, there's a schedule, you can watch this, you can watch that." And of course, not all parents were actively involved in the lives of their children. Some would just sit kids in front of the TV and they could watch Saturday morning cartoons.

But now, based on the very first thing you watch, the app generates a list of suggestions and can jump from video to video in sequence if the parent hasn't prescheduled content. I think that's interesting because the parent's role in deciding which content the child should or shouldn't watch is lessened. Even though I try to be an active participant when my children are watching things, I know that I'm not able to program that algorithm. I can't tell the app that it cannot jump to or suggest this or that kind of content when I haven't scheduled content, and I think the concern is that we're increasingly ceding parenting control to those algorithms and technology.

What can be done?

The reason we've had restrictions on advertising to children for all these years is that children have been shown to be extremely impressionable at these young ages. So collectively we have to ask: Is there an age at which too much advertising penetrating the lives of these children is problematic? If so, we need to apply more regulation to this space.

I always encourage parents of any age to play with the technology themselves, to download the apps and experience them. Be aware of what the privacy settings are. Be aware of the control over the data that the app gives you, however limited it is. Sit down and watch the content yourself.

Also, I think it's a good idea to check the browsing history as often as possible. Have discussions with children about the content they're watching, and empower the child if you feel there's too much advertising or too much content you've deemed negative. Have an open dialog, even with young children, about how they feel and how that content makes them think about the world or certain brands, so that they can start to become critical thinkers.